Building PokéAgents: Personality-Driven AI Agents (Part 1)

What happens when you give AI agents distinct personalities? I built a system using Pokémon to find out.

Most AI agents today are boring. They're optimized, efficient, and somewhat predictable. Ask ChatGPT the same question ten times, and you'll get ten variations of the same response. There's no personality, no quirks, and no authentic voice.

But what if AI agents had real personalities? What if they disagreed with each other, got frustrated, built confidence, and approached problems differently based on who they were?

I decided to find out by building a multi-agent system where Pokémon collaborate to solve tasks. Here's what I learned.

Why Pokémon Make Perfect AI Agents

Pokémon aren't just cute creatures; they're personality archetypes. Each one has distinct traits, motivations, and behavioral patterns that are immediately recognizable.

Think about it:

- Pikachu is energetic and inspiring but sometimes impulsive

- Alakazam is analytical and strategic but can overthink problems

- Machamp is action-oriented and direct but might rush solutions

- Chansey is nurturing and supportive but avoids conflict

These aren't random characteristics; they're consistent personality frameworks that affect how each Pokémon would approach any situation.

Perfect for testing whether personality actually matters in AI collaboration.

The Problem with Generic AI

Here's what happened when I gave a standard AI this prompt:

Prompt: "Help me choose a starter Pokémon"

Response: "Consider type effectiveness, movesets, and growth potential. Charmander offers strong offensive capabilities but requires careful training. Bulbasaur provides balanced stats and type advantages against early gym leaders..."

Technically correct. Completely soulless.

Now here's what happened when I gave four Pokémon personalities the same task:

Charmander: "Fire types dominate battles! You want power that grows stronger with every victory!"

Bulbasaur: "But what about a new trainer's experience level? Grass types are forgiving and teach good fundamentals."

Squirtle: "Safety first - Water types can handle unexpected situations and protect their trainer."

Pikachu: "Forget everything else! What starter will create the most amazing adventures and memories?"

Same question. Four completely different perspectives. And suddenly the "right" answer isn't that obvious anymore.

Building Personality Into Code

The technical challenge was making personality more than just different word choices. I needed agents that actually think differently based on who they are.

Here's how I did it:

Dynamic Personality States

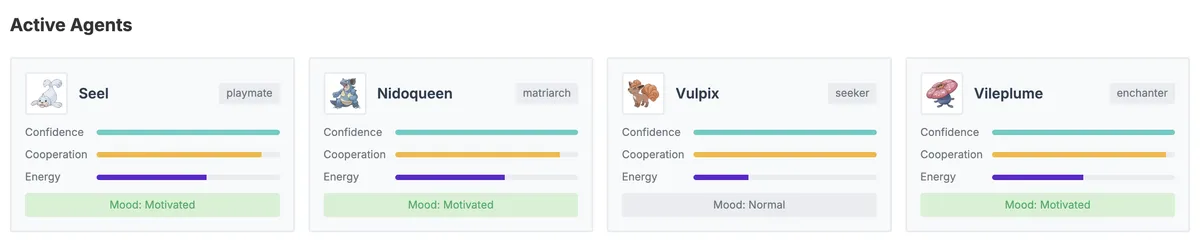

Each agent has four key attributes that change throughout conversations:

    from dataclasses import dataclass

    @dataclass
    class AgentState:
        confidence: float = 0.7   # How sure they are of their ideas
        cooperation: float = 0.7  # Willingness to support others
        energy: float = 1.0       # Capacity for participation
        mood: str = "normal"      # Emotional context

These aren't static traits — they evolve based on what happens. When Pikachu's idea gets supported by others, his confidence goes up. When Alakazam gets interrupted repeatedly, his mood shifts to "frustrated" and his responses become more critical.

This creates authentic personality changes that affect future decisions.
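To make the idea of evolving state concrete, here is a minimal sketch of how such updates might look. It is not the project's actual code: the event names `"supported"` and `"interrupted"`, the 0.1 step size, the two-interruption threshold, and the extra `interruptions` counter are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AgentState:
    confidence: float = 0.7
    cooperation: float = 0.7
    energy: float = 1.0
    mood: str = "normal"
    interruptions: int = 0  # hypothetical counter, not one of the four attributes


def clamp(value: float) -> float:
    """Keep an attribute inside the 0.0 to 1.0 range."""
    return max(0.0, min(1.0, value))


def apply_event(state: AgentState, event: str) -> AgentState:
    """Update the state in place for one conversation event (assumed event names)."""
    if event == "supported":
        # Another agent backed this agent's idea: confidence rises.
        state.confidence = clamp(state.confidence + 0.1)
        state.interruptions = 0
    elif event == "interrupted":
        # Being cut off costs energy; repeated interruptions sour the mood.
        state.interruptions += 1
        state.energy = clamp(state.energy - 0.1)
        if state.interruptions >= 2:
            state.mood = "frustrated"
            state.cooperation = clamp(state.cooperation - 0.1)
    return state
```

With these assumed rules, one interruption leaves an agent's mood at "normal", while a second one in a row flips it to "frustrated" and lowers its cooperation.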

Smart Agent Selection

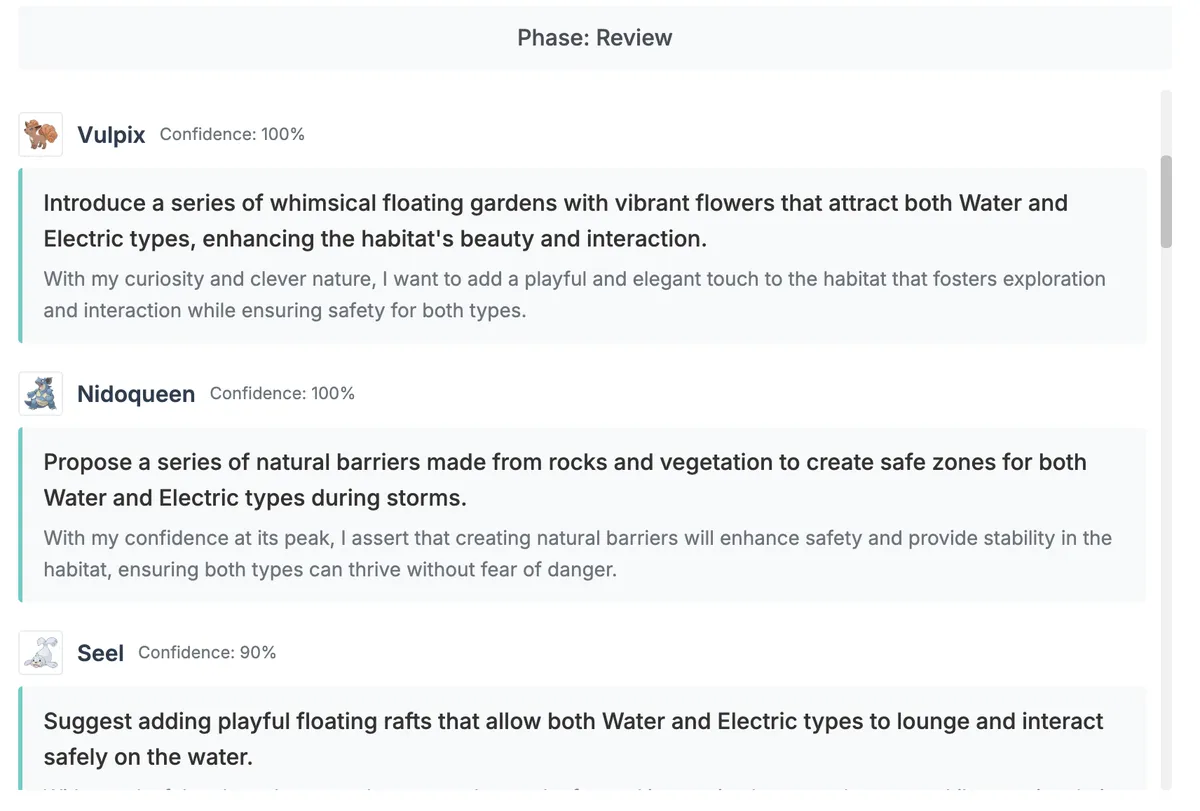

Instead of round-robin turns, I built a weighted selection system that considers both personality and context.

During brainstorming phases, creative personalities like "explorer" and "inspirer" get boosted selection weights. During planning phases, analytical types like "strategist" and "analyst" are more likely to speak.

The result is a natural conversation flow in which the right personality speaks at the right time.
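A weighted selection like this could be sketched as follows. This is one possible approach, not the exact implementation: the `PHASE_BOOSTS` table, the 2.0 boost factor, the use of energy as the base weight, and the `(name, type, energy)` tuple layout are assumptions made for the example.

```python
import random

# Hypothetical boost table: phase -> personality types favored in that phase.
PHASE_BOOSTS = {
    "brainstorming": {"explorer", "inspirer"},
    "planning": {"strategist", "analyst"},
}


def selection_weights(agents, phase, boost=2.0):
    """Return one weight per agent; agents is a list of (name, type, energy)."""
    favored = PHASE_BOOSTS.get(phase, set())
    weights = []
    for _name, personality_type, energy in agents:
        # Assumed base weight: current energy, floored so nobody is silenced.
        weight = max(energy, 0.1)
        if personality_type in favored:
            weight *= boost
        weights.append(weight)
    return weights


def pick_speaker(agents, phase, rng=random):
    """Pick the next speaker at random, biased by the phase-aware weights."""
    weights = selection_weights(agents, phase)
    return rng.choices(agents, weights=weights, k=1)[0]


agents = [("Pikachu", "inspirer", 1.0), ("Alakazam", "analyst", 1.0)]
print(pick_speaker(agents, "brainstorming"))
```

Because the choice is still random, a boosted agent is only more likely to speak, not guaranteed to, which keeps the conversation from becoming a fixed rotation.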

Personality-Driven Prompts

Here's the real magic. Instead of generic prompts, each agent gets context about their personality and current state:

You are Pikachu, known for being energetic and inspiring others.

Current state: frustrated (energy low from previous attempts)

Recent context: Alakazam just proposed a complex analytical solution

Based on your personality and current mood, how do you respond?

Same underlying AI model, but completely different behavior based on who each agent thinks it is.
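A prompt like the one above can be assembled from an agent's name, traits, and current state. The sketch below shows one way to do that; the function name `build_prompt` and its parameters are hypothetical, and the model call itself is left out.

```python
def build_prompt(name, traits, mood, state_note, recent_context):
    """Assemble a personality-aware prompt from an agent's current state."""
    lines = [
        f"You are {name}, known for being {traits}.",
        f"Current state: {mood} ({state_note})",
        f"Recent context: {recent_context}",
        "Based on your personality and current mood, how do you respond?",
    ]
    return "\n".join(lines)


prompt = build_prompt(
    name="Pikachu",
    traits="energetic and inspiring others",
    mood="frustrated",
    state_note="energy low from previous attempts",
    recent_context="Alakazam just proposed a complex analytical solution",
)
print(prompt)
```

Keeping the template in one function means the same model receives a different framing every turn as the agent's mood and the recent context change.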

First Experiments: Surprising Results

I started with simple tasks to see if personality actually mattered. The results exceeded my expectations.

Experiment 1: Design a Pokémon Gym

I asked four agents to collaborate on designing a new Pokémon gym. Here's what emerged:

Turn 1 - Machamp (Warrior): "Electric gym with high-intensity battles! Fast-paced, aggressive, test trainer's reflexes!"

Turn 2 - Chansey (Nurturer): "What about newer trainers? Maybe start with basic Electric types and scale difficulty?"

Turn 3 - Alakazam (Analyst): "Optimal design requires considering trainer distribution. Statistical analysis shows..."

Turn 4 - Pikachu (Inspirer): "You're all missing the point! What makes a gym memorable? What story are we telling?"

Turn 5 - Machamp: "Pikachu's right about stories, but Chansey makes a good point about accessibility..."

Notice what happened? Machamp, the "warrior" personality, actually listened to others and modified his position. This wasn't programmed; it emerged from the personality dynamics.

By turn 12, they'd designed a gym that was challenging but accessible, with a compelling narrative theme, backed by solid statistical reasoning. No single agent would have created something so comprehensive.

Experiment 2: Emergency Response Planning

For a more complex test, I asked them to plan a rescue mission for Pokémon trapped in a collapsing cave.

The analytical agents immediately started calculating optimal routes and resource allocation. The action-oriented agents pushed for immediate deployment. The nurturing agents worried about psychological trauma for rescued Pokémon.

But here's what surprised me: the conflicts made the solution better.

When Alakazam proposed a "statistically optimal" route that would save the most Pokémon but leave some behind, Chansey's emotional objection forced the team to find creative alternatives. When Machamp wanted to charge in immediately, Squirtle's safety concerns led to better preparation.

The final plan was more thorough, more humane, and more practical than anything a single agent produced.

What I Learned About Personality in AI

Authenticity Creates Engagement

Users interacted differently with personality-driven agents. Instead of just reading outputs, they started anticipating responses. "What will Pikachu say about this?" became a natural thought.

The agents felt real in a way that surprised me. Not human, but authentically themselves.

Diversity Drives Better Solutions

Homogeneous teams reached consensus quickly but produced shallow solutions. Diverse personality teams took longer but consistently found insights that no single perspective revealed.

The disagreements weren't bugs — they were features. Conflict forced deeper exploration of problems.

Emergence is Real

Individual agents followed simple personality rules. But complex behaviors emerged from their interactions that I never explicitly programmed.

Agents started building on each other's ideas in sophisticated ways. Natural leadership patterns emerged based on task type and agent confidence. Some personality combinations developed clear working relationships.

The whole became genuinely greater than the sum of its parts.

Early Insights and Questions

After dozens of test runs, some patterns emerged:

Personality-driven agents weren't more efficient, but they were more trusted. Their outputs were preferred even when they took longer to reach conclusions.

Mixed personality teams consistently outperformed homogeneous teams on complex tasks, even though they had more conflicts.

Simple personality rules created complex collaborative behaviors that felt surprisingly sophisticated.

But this raised bigger questions:

- Can AI agents develop preferences for working with specific teammates?

- Do some personality combinations consistently work better together?

- What happens when agents learn from multiple collaborative experiences?

- Could personality diversity reduce AI bias in real-world applications?

What's Next

This is just the beginning. In Part 2, I'll explore what happens when these personality-driven agents start building relationships over multiple tasks. Do they develop trust? Can they learn each other's working styles? And what collaborative patterns emerge when AI agents begin forming genuine working partnerships?

The early results suggest we might be onto something important. Personality in AI isn't just about making agents more engaging — it might be a path toward more thoughtful, diverse, and ultimately better artificial intelligence.

Next up: Part 2 - "Emergent Relationships in AI" - How agents learn to work together over time